Two-Bit History

How the ARPANET Protocols Worked

Published 2021-03-08 at https://twobithistory.org/2021/03/08/arpanet-protocols

The ARPANET changed computing forever by proving that computers of wildly different manufacture could be connected using standardized protocols. In my post on the historical significance of the ARPANET, I mentioned a few of those protocols, but didn’t describe them in any detail. So I wanted to take a closer look at them. I also wanted to see how much of the design of those early protocols survives in the protocols we use today.

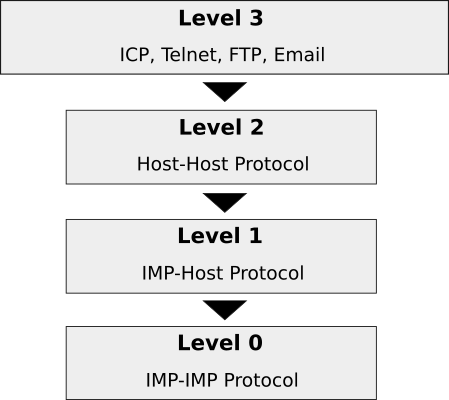

The ARPANET protocols were, like our modern internet protocols, organized into layers.1 The protocols in the higher layers ran on top of the protocols in the lower layers. Today the TCP/IP suite has five layers (the Physical, Link, Network, Transport, and Application layers), but the ARPANET had only three layers—or possibly four, depending on how you count them.

I’m going to explain how each of these layers worked, but first an aside about who built what in the ARPANET, which you need to know to understand why the layers were divided up as they were.

Some Quick Historical Context

The ARPANET was funded by the US federal government, specifically the Advanced Research Projects Agency within the Department of Defense (hence the name “ARPANET”). The US government did not directly build the network; instead, it contracted the work out to a Boston-based consulting firm called Bolt, Beranek, and Newman, more commonly known as BBN.

BBN, in turn, handled many of the responsibilities for implementing the network but not all of them. What BBN did was design and maintain a machine known as the Interface Message Processor, or IMP. The IMP was a customized Honeywell minicomputer, one of which was delivered to each site across the country that was to be connected to the ARPANET. The IMP served as a gateway to the ARPANET for up to four hosts at each host site. It was basically a router. BBN controlled the software running on the IMPs that forwarded packets from IMP to IMP, but the firm had no direct control over the machines that would connect to the IMPs and become the actual hosts on the ARPANET.

The host machines were controlled by the computer scientists that were the end users of the network. These computer scientists, at host sites across the country, were responsible for writing the software that would allow the hosts to talk to each other. The IMPs gave hosts the ability to send messages to each other, but that was not much use unless the hosts agreed on a format to use for the messages. To solve that problem, a motley crew consisting in large part of graduate students from the various host sites formed themselves into the Network Working Group, which sought to specify protocols for the host computers to use.

So if you imagine a single successful network interaction over the ARPANET (sending an email, say), some bits of engineering that made the interaction successful were the responsibility of one set of people (BBN), while other bits of engineering were the responsibility of another set of people (the Network Working Group and the engineers at each host site). That organizational and logistical happenstance probably played a big role in motivating the layered approach used for protocols on the ARPANET, which in turn influenced the layered approach used for TCP/IP.

Okay, Back to the Protocols

The ARPANET protocol hierarchy.

The protocol layers were organized into a hierarchy. At the very bottom was “level 0.”2 This is the layer that in some sense doesn’t count, because on the ARPANET this layer was controlled entirely by BBN, so there was no need for a standard protocol. Level 0 governed how data passed between the IMPs. Inside of BBN, there were rules governing how IMPs did this; outside of BBN, the IMP sub-network was a black box that just passed on any data that you gave it. So level 0 was a layer without a real protocol, in the sense of a publicly known and agreed-upon set of rules, and its existence could be ignored by software running on the ARPANET hosts. Loosely speaking, it handled everything that falls under the Physical, Link, and Internet layers of the TCP/IP suite today, and even quite a lot of the Transport layer, which is something I’ll come back to at the end of this post.

The “level 1” layer established the interface between the ARPANET hosts and the IMPs they were connected to. It was an API, if you like, for the black box level 0 that BBN had built. It was also referred to at the time as the IMP-Host Protocol. This protocol had to be written and published because, when the ARPANET was first being set up, each host site had to write its own software to interface with the IMP. They wouldn’t have known how to do that unless BBN gave them some guidance.

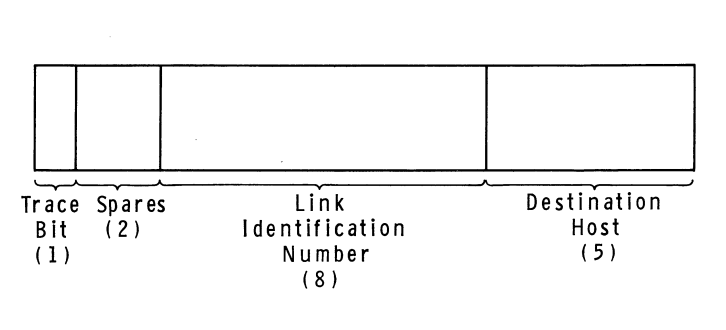

The IMP-Host Protocol was specified by BBN in a lengthy document called [BBN Report 1822](https://walden-family.com/impcode/BBN1822_Jan1976.pdf). The document was revised many times as the ARPANET evolved; what I’m going to describe here is roughly the way the IMP-Host protocol worked as it was initially designed. According to BBN’s rules, hosts could pass messages to their IMPs no longer than 8095 bits, and each message had a leader that included the destination host number and something called a link number.3 The IMP would examine the destination host number and then dutifully forward the message into the network. When messages were received from a remote host, the receiving IMP would replace the destination host number with the source host number before passing it on to the local host. Messages were not actually what passed between the IMPs themselves (the IMPs broke the messages down into smaller packets for transfer over the network), but that detail was hidden from the hosts.

The Host-IMP message leader format, as of 1969. Diagram from BBN Report 1763.
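To make the leader handling concrete, here is a minimal Python sketch of a message as a host would see it. The class and function names are hypothetical, the real leader had a specific bit layout that is not modeled here, and treating the 8095-bit limit as applying to the whole message is an assumption.

```python
from dataclasses import dataclass

MAX_MESSAGE_BITS = 8095  # size limit quoted in the text
MAX_LINK = 255           # link numbers ran from 0 to 255


@dataclass(frozen=True)
class Message:
    """Hypothetical model of a host/IMP message: leader fields plus a length."""
    host: int         # destination host when sending, source host when receiving
    link: int         # link number carried in the leader
    length_bits: int  # total message length (assumed to include everything)

    def __post_init__(self):
        if not 0 <= self.link <= MAX_LINK:
            raise ValueError("link number must be between 0 and 255")
        if not 0 <= self.length_bits <= MAX_MESSAGE_BITS:
            raise ValueError("message longer than 8095 bits")


def deliver(message: Message, source_host: int) -> Message:
    """Mimic the receiving IMP: replace the destination number with the source."""
    return Message(host=source_host, link=message.link,
                   length_bits=message.length_bits)


outgoing = Message(host=134, link=5, length_bits=1000)
incoming = deliver(outgoing, source_host=65)
print(incoming.host, incoming.link)
```

The receiving host never sees its own number in the leader; it sees who the message came from, which is all it needs in order to reply.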

The link number, which could be any number from 0 to 255, served two purposes. It was used by higher level protocols to establish more than one channel of communication between any two hosts on the network, since it was conceivable that there might be more than one local user talking to the same destination host at any given time. (In other words, the link numbers allowed communication to be multiplexed between hosts.) But it was also used at the level 1 layer to control the amount of traffic that could be sent between hosts, which was necessary to prevent faster computers from overwhelming slower ones. As initially designed, the IMP-Host Protocol limited each host to sending just one message at a time over each link. Once a given host had sent a message along a link to a remote host, it would have to wait to receive a special kind of message called an RFNM (Request for Next Message) from the remote IMP before sending the next message along the same link. Later revisions to this system, made to improve performance, allowed a host to have up to eight messages in transit to another host at a given time.4
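The one-message-per-link rule can be sketched as a small bookkeeping class on the sending host. This is only an illustration of the rule as described above; the class and method names are hypothetical and the later eight-message revision is not modeled.

```python
class LinkFlowControl:
    """Hypothetical tracker for links that are waiting on an RFNM."""

    def __init__(self):
        # (remote_host, link) pairs that have a message in transit
        self._awaiting_rfnm = set()

    def can_send(self, remote_host, link):
        """A link is free only if no earlier message is still unacknowledged."""
        return (remote_host, link) not in self._awaiting_rfnm

    def send(self, remote_host, link):
        """Record a message as in transit, refusing if the link is blocked."""
        if not self.can_send(remote_host, link):
            raise RuntimeError("link blocked until an RFNM arrives")
        self._awaiting_rfnm.add((remote_host, link))

    def receive_rfnm(self, remote_host, link):
        """An RFNM from the remote IMP frees the link for the next message."""
        self._awaiting_rfnm.discard((remote_host, link))


flow = LinkFlowControl()
flow.send(134, 1)
print(flow.can_send(134, 1))  # blocked: still waiting on the RFNM
print(flow.can_send(134, 2))  # a different link to the same host is free
flow.receive_rfnm(134, 1)
print(flow.can_send(134, 1))  # free again
```

Because the blocking is per link rather than per host, a second conversation with the same remote host could proceed on another link while the first one waited.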

The “level 2” layer is where things really start to get interesting, because it was this layer and the one above it that BBN and the Department of Defense left entirely to the academics and the Network Working Group to invent for themselves. The level 2 layer comprised the Host-Host Protocol, which was first sketched in RFC 9 and first officially specified by RFC 54. A more readable explanation of the Host-Host Protocol is given in the [ARPANET Protocol Handbook](http://mercury.lcs.mit.edu/~jnc/tech/arpaprot.html).

The Host-Host Protocol governed how hosts created and managed connections with each other. A connection was a one-way data pipeline between a write socket on one host and a read socket on another host. The “socket” concept was introduced on top of the limited level-1 link facility (remember that the link number can only be one of 256 values) to give programs a way of addressing a particular process running on a remote host. Read sockets were even-numbered while write sockets were odd-numbered; whether a socket was a read socket or a write socket was referred to as the socket’s gender. There were no “port numbers” like in TCP. Connections could be opened, manipulated, and closed by specially formatted Host-Host control messages sent between hosts using link 0, which was reserved for that purpose. Once control messages were exchanged over link 0 to establish a connection, further data messages could then be sent using another link number picked by the receiver.

Host-Host control messages were identified by a three-letter mnemonic. A connection was established when two hosts exchanged a STR (sender-to-receiver) message and a matching RTS (receiver-to-sender) message—these control messages were both known as Request for Connection messages. Connections could be closed by the CLS (close) control message. There were further control messages that changed the rate at which data messages were sent from sender to receiver, which were needed to ensure again that faster hosts did not overwhelm slower hosts. The flow control already provided by the level 1 protocol was apparently not sufficient at level 2; I suspect this was because receiving an RFNM from a remote IMP was only a guarantee that the remote IMP had passed the message on to the destination host, not that the host had fully processed the message. There was also an INR (interrupt-by-receiver) control message and an INS (interrupt-by-sender) control message that were primarily for use by higher-level protocols.
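The socket parity rule and the pairing of the two Request for Connection messages can be sketched as follows. The field names and the matching function are assumptions made for illustration; the actual control message formats are defined in the Host-Host Protocol documents, not here.

```python
from typing import NamedTuple, Optional


def gender(socket: int) -> str:
    """Read sockets were even-numbered, write sockets odd-numbered."""
    return "read" if socket % 2 == 0 else "write"


class StrRequest(NamedTuple):
    """Sender-to-receiver request (hypothetical field names)."""
    write_socket: int  # on the sending host
    read_socket: int   # on the receiving host


class RtsRequest(NamedTuple):
    """Receiver-to-sender request; the receiver picks the data link."""
    read_socket: int
    write_socket: int
    link: int


def match(s: StrRequest, r: RtsRequest) -> Optional[int]:
    """Return the link for data messages if the two requests pair up."""
    if gender(s.write_socket) != "write" or gender(s.read_socket) != "read":
        return None  # sockets have the wrong gender for this direction
    if (s.write_socket, s.read_socket) != (r.write_socket, r.read_socket):
        return None  # the requests name different socket pairs
    if r.link == 0:
        return None  # link 0 was reserved for control messages
    return r.link


print(match(StrRequest(101, 200), RtsRequest(200, 101, 7)))
```

Both requests travel over link 0; only once they match does data start flowing on the link the receiver chose.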

The higher-level protocols all lived in “level 3”, which was the Application layer of the ARPANET. The Telnet protocol, which provided a virtual teletype connection to another host, was perhaps the most important of these protocols, but there were many others in this level too, such as FTP for transferring files and various experiments with protocols for sending email.

One protocol in this level was not like the others: the Initial Connection Protocol (ICP). ICP was considered to be a level-3 protocol, but really it was a kind of level-2.5 protocol, since other level-3 protocols depended on it. ICP was needed because the connections provided by the Host-Host Protocol at level 2 were only one-way, but most applications required a two-way (i.e. full-duplex) connection to do anything interesting. ICP specified a two-step process whereby a client running on one host could connect to a long-running server process on another host. The first step involved establishing a one-way connection from the server to the client using the server process’ well-known socket number. The server would then send a new socket number to the client over the established connection. At that point, the existing connection would be discarded and two new connections would be opened, a read connection based on the transmitted socket number and a write connection based on the transmitted socket number plus one. This little dance was a necessary prelude to most things—it was the first step in establishing a Telnet connection, for example.
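The two-step ICP dance can be traced with a short sketch. The function name and the step descriptions are hypothetical, and it assumes the transmitted socket number is even so that the even-read, odd-write parity rule from the Host-Host Protocol holds for the resulting pair.

```python
def initial_connection(well_known_socket: int, new_socket: int):
    """Trace the ICP steps described above and return the final socket pair."""
    if new_socket % 2 != 0:
        # Assumption: the transmitted number is even, so number + 1 is odd.
        raise ValueError("expected an even socket number")
    steps = [
        f"open one-way connection from server socket {well_known_socket}",
        f"server sends socket number {new_socket} over that connection",
        "discard the initial connection",
        f"open read connection based on socket {new_socket}",
        f"open write connection based on socket {new_socket + 1}",
    ]
    pair = {"read": new_socket, "write": new_socket + 1}
    return pair, steps


pair, steps = initial_connection(well_known_socket=1, new_socket=300)
for step in steps:
    print(step)
print(pair)
```

The well-known socket is used only long enough to hand out a fresh number, which leaves it free for the next client.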

That finishes our ascent of the ARPANET protocol hierarchy. You may have been expecting me to mention a “Network Control Protocol” at some point. Before I sat down to do research for this post and my last one, I definitely thought that the ARPANET ran on a protocol called NCP. The acronym is occasionally used to refer to the ARPANET protocols as a whole, which might be why I had that idea. RFC 801, for example, talks about transitioning the ARPANET from “NCP” to “TCP” in a way that makes it sound like NCP is an ARPANET protocol equivalent to TCP. But there has never been a “Network Control Protocol” for the ARPANET (even if [Encyclopedia Britannica thinks so](https://www.britannica.com/topic/ARPANET)), and I suspect people have mistakenly unpacked “NCP” as “Network Control Protocol” when really it stands for “Network Control Program.” The Network Control Program was the kernel-level program running in each host responsible for handling network communication, equivalent to the TCP/IP stack in an operating system today. “NCP”, as it’s used in RFC 801, is a metonym, not a protocol.

A Comparison with TCP/IP

The ARPANET protocols were all later supplanted by the TCP/IP protocols (with the exception of Telnet and FTP, which were easily adapted to run on top of TCP). Whereas the ARPANET protocols were all based on the assumption that the network was built and administered by a single entity (BBN), the TCP/IP protocol suite was designed for an inter-net, a network of networks where everything would be more fluid and unreliable. That led to some of the more immediately obvious differences between our modern protocol suite and the ARPANET protocols, such as how we now distinguish between a Network layer and a Transport layer. The Transport layer-like functionality that in the ARPANET was partly implemented by the IMPs is now the sole responsibility of the hosts at the network edge.

What I find most interesting about the ARPANET protocols though is how so much of the transport-layer functionality now in TCP went through a janky adolescence on the ARPANET. I’m not a networking expert, so I pulled out my college networks textbook (Kurose and Ross, let’s go), and they give a pretty great outline of what a transport layer is responsible for in general. To summarize their explanation, a transport layer protocol must minimally do the following things. Here segment is basically equivalent to message as the term was used on the ARPANET:

- Provide a delivery service between processes and not just host machines (transport layer multiplexing and demultiplexing)

- Provide integrity checking on a per-segment basis (i.e. make sure there is no data corruption in transit)

A transport layer could also, like TCP does, provide reliable data transfer, which means:

- Segments are delivered in order

- No segments go missing

- Segments aren’t delivered so fast that they get dropped by the receiver (flow control)

It seems like there was some confusion on the ARPANET about how to do multiplexing and demultiplexing so that processes could communicate. BBN introduced the link number to do that at the IMP-Host level, but it turned out that socket numbers were necessary at the Host-Host level on top of that anyway. Then the link number was just used for flow control at the IMP-Host level, but BBN seems to have later abandoned that in favor of doing flow control between unique pairs of hosts, meaning that the link number started out as this overloaded thing only to basically become vestigial. TCP now uses port numbers instead, doing flow control over each TCP connection separately. The process-process multiplexing and demultiplexing lives entirely inside TCP and does not leak into a lower layer like on the ARPANET.

It’s also interesting to see, in light of how Kurose and Ross develop the ideas behind TCP, that the ARPANET started out with what Kurose and Ross would call a strict “stop-and-wait” approach to reliable data transfer at the IMP-Host level. The “stop-and-wait” approach is to transmit a segment and then refuse to transmit any more segments until an acknowledgment for the most recently transmitted segment has been received. It’s a simple approach, but it means that only one segment is ever in flight across the network, making for a very slow protocol, which is why Kurose and Ross present “stop-and-wait” as merely a stepping stone on the way to a fully featured transport layer protocol. On the ARPANET, “stop-and-wait” was how things worked for a while, since, at the IMP-Host level, a Request for Next Message had to be received in response to every outgoing message before any further messages could be sent. To be fair to BBN, they at first thought this would be necessary to provide flow control between hosts, so the slowdown was intentional. As I’ve already mentioned, the RFNM requirement was later relaxed for the sake of better performance, and the IMPs started attaching sequence numbers to messages and keeping track of a “window” of messages in flight in more or less the same way that TCP implementations do today.5
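A back-of-the-envelope sketch shows why relaxing stop-and-wait matters. It uses an idealized model that is my own assumption, not something from BBN or Kurose and Ross: every message in a window is acknowledged after exactly one round trip, and nothing is lost or retransmitted.

```python
import math


def round_trips(messages: int, window: int) -> int:
    """Round trips needed to send `messages` with `window` allowed in flight."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return math.ceil(messages / window)


# Stop-and-wait is a window of one; the later ARPANET revision allowed eight.
print(round_trips(16, window=1))
print(round_trips(16, window=8))
```

Under these assumptions, sixteen messages cost sixteen round trips with stop-and-wait but only two with a window of eight, which is the whole appeal of keeping more than one message in flight.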

So the ARPANET showed that communication between heterogeneous computing systems is possible if you get everyone to agree on some baseline rules. That is, as I’ve previously argued, the ARPANET’s most important legacy. But what I hope this closer look at those baseline rules has revealed is just how much the ARPANET protocols also influenced the protocols we use today. There was certainly a lot of awkwardness in the way that transport-layer responsibilities were shared between the hosts and the IMPs, sometimes redundantly. And it’s really almost funny in retrospect that hosts could at first only send each other a single message at a time over any given link. But the ARPANET experiment was a unique opportunity to learn those lessons by actually building and operating a network, and it seems those lessons were put to good use when it came time to upgrade to the internet as we know it today.

The Real Novelty of the ARPANET

Published 2021-02-07 at https://twobithistory.org/2021/02/07/arpanet

If you run an image search for the word “ARPANET,” you will find lots of maps showing how the [government research network](https://en.wikipedia.org/wiki/ARPANET) expanded steadily across the country throughout the late ’60s and early ’70s. I’m guessing that most people reading or hearing about the ARPANET for the first time encounter one of these maps.

Obviously, the maps are interesting—it’s hard to believe that there were once so few networked computers that their locations could all be conveyed with what is really pretty lo-fi cartography. (We’re talking 1960s overhead projector diagrams here. You know the vibe.) But the problem with the maps, drawn as they are with bold lines stretching across the continent, is that they reinforce the idea that the ARPANET’s paramount achievement was connecting computers across the vast distances of the United States for the first time.

Today, the internet is a lifeline that keeps us tethered to each other even as an airborne virus has us all locked up indoors. So it’s easy to imagine that, if the ARPANET was the first draft of the internet, then surely the world that existed before it was entirely disconnected, since that’s where we’d be without the internet today, right? The ARPANET must have been a big deal because it connected people via computers when that hadn’t before been possible.

That view doesn’t get the history quite right. It also undersells what made the ARPANET such a breakthrough.

The Debut

The Washington Hilton stands near the top of a small rise about a mile and a half northeast of the National Mall. Its two white-painted modern facades sweep out in broad semicircles like the wings of a bird. The New York Times, reporting on the hotel’s completion in 1965, remarked that the building looks “like a sea gull perched on a hilltop nest.”1

The hotel hides its most famous feature below ground. Underneath the driveway roundabout is an enormous ovoid event space known as the International Ballroom, which was for many years the largest pillar-less ballroom in DC. In 1967, the Doors played a concert there. In 1968, Jimi Hendrix also played a concert there. In 1972, a somewhat more sedate act took over the ballroom to put on the inaugural International Conference on Computer Communication, where a promising research project known as the ARPANET was demonstrated publicly for the first time.

The 1972 ICCC, which took place from October 24th to 26th, was attended by about 800 people.2 It brought together all of the leading researchers in the nascent field of computer networking. According to internet pioneer Bob Kahn, “if somebody had dropped a bomb on the Washington Hilton, it would have destroyed almost all of the networking community in the US at that point.”3

Not all of the attendees were computer scientists, however. An advertisement for the conference claimed it would be “user-focused” and geared toward “lawyers, medical men, economists, and government men as well as engineers and communicators.”4 Some of the conference’s sessions were highly technical, such as the session titled “Data Network Design Problems I” and its sequel session, “Data Network Design Problems II.” But most of the sessions were, as promised, focused on the potential social and economic impacts of computer networking. One session, eerily prescient today, sought to foster a discussion about how the legal system could act proactively “to safeguard the right of privacy in the computer data bank.”5

The ARPANET demonstration was intended as a side attraction of sorts for the attendees. Between sessions, which were held either in the International Ballroom or elsewhere on the lower level of the hotel, attendees were free to wander into the Georgetown Ballroom (a smaller ballroom/conference room down the hall from the big one),6 where there were 40 terminals from a variety of manufacturers set up to access the ARPANET.7 These terminals were dumb terminals—they only handled input and output and could do no computation on their own. (In fact, in 1972, it’s likely that all of these terminals were hardcopy terminals, i.e. teletype machines.) The terminals were all hooked up to a computer known as a Terminal Interface Message Processor or TIP, which sat on a raised platform in the middle of the room. The TIP was a kind of archaic router specially designed to connect dumb terminals to the ARPANET. Using the terminals and the TIP, the ICCC attendees could experiment with logging on and accessing some of the computers at the 29 host sites then comprising the ARPANET.8

To exhibit the network’s capabilities, researchers at the host sites across the country had collaborated to prepare 19 simple “scenarios” for users to experiment with. These scenarios were compiled into [a booklet](https://archive.computerhistory.org/resources/access/text/2019/07/102784024-05-001-acc.pdf) that was handed to conference attendees as they tentatively approached the maze of wiring and terminals.9 The scenarios were meant to prove that the new technology worked but also that it was useful, because so far the ARPANET was “a highway system without cars,” and its Pentagon funders hoped that a public demonstration would excite more interest in the network.10

The scenarios thus showed off a diverse selection of the software that could be accessed over the ARPANET: There were programming language interpreters, one for a Lisp-based language at MIT and another for a numerical computing environment called Speakeasy hosted at UCLA; there were games, including a chess program and an implementation of Conway’s Game of Life; and—perhaps most popular among the conference attendees—there were several AI chat programs, including the famous ELIZA chat program developed at MIT by Joseph Weizenbaum.

The researchers who had prepared the scenarios were careful to list each command that users were expected to enter at their terminals. This was especially important because the sequence of commands used to connect to any given ARPANET host could vary depending on the host in question. To experiment with the AI chess program hosted on the MIT Artificial Intelligence Laboratory’s PDP-10 minicomputer, for instance, conference attendees were instructed to enter the following:

[LF], [SP], and [CR] below stand for the line feed, space, and carriage return keys respectively. I’ve explained each command after //, but this syntax was not used for the annotations in the original.

    @r [LF] // Reset the TIP
    @e [SP] r [LF] // "Echo remote" setting, host echoes characters rather than TIP
    @L [SP] 134 [LF] // Connect to host number 134
    :login [SP] iccXXX [CR] // Login to the MIT AI Lab's system, where "XXX" should be user's initials
    :chess [CR] // Start chess program

If conference attendees were successfully able to enter those commands, their reward was the opportunity to play around with some of the most cutting-edge chess software available at the time, where the layout of the board was represented like this:

    BR BN BB BQ BK BB BN BR
    BP BP BP BP ** BP BP BP
    -- ** -- ** -- ** -- **
    ** -- ** -- BP -- ** --
    -- ** -- ** WP ** -- **
    ** -- ** -- ** -- ** --
    WP WP WP WP -- WP WP WP
    WR WN WB WQ WK WB WN WR

In contrast, to connect to UCLA’s IBM System/360 and run the Speakeasy numerical computing environment, conference attendees had to enter the following:

    @r [LF] // Reset the TIP
    @t [SP] o [SP] L [LF] // "Transmit on line feed" setting
    @i [SP] L [LF] // "Insert line feed" setting, i.e. send line feed with each carriage return
    @L [SP] 65 [LF] // Connect to host number 65
    tso // Connect to IBM Time-Sharing Option system
    logon [SP] icX [CR] // Log in with username, where "X" should be a freely chosen digit
    iccc [CR] // This is the password (so secure!)
    speakez [CR] // Start Speakeasy

Successfully running that gauntlet gave attendees the power to multiply and transpose and do other operations on matrices as quickly as they could input them at their terminal:

    :+! a=m*transpose(m);a [CR]
    :+! eigenvals(a) [CR]

Many of the attendees were impressed by the demonstration, but not for the reasons that we, from our present-day vantage point, might assume. The key piece of context hard to keep in mind today is that, in 1972, being able to use a computer remotely, even from a different city, was not new. Teletype devices had been used to talk to distant computers for decades already. Almost a full five years before the ICCC, Bill Gates was in a Seattle high school using a teletype to run his first BASIC programs on a General Electric computer housed elsewhere in the city. Merely logging in to a host computer and running a few commands or playing a text-based game was routine. The software on display here was pretty neat, but the two scenarios I’ve told you about so far could ostensibly have been experienced without going over the ARPANET.

Of course, something new was happening under the hood. The lawyers, policy-makers, and economists at the ICCC might have been enamored with the clever chess program and the chat bots, but the networking experts would have been more interested in two other scenarios that did a better job of demonstrating what the ARPANET project had achieved.

The first of these scenarios involved a program called NETWRK running on MIT’s ITS operating system. The NETWRK command was the entrypoint for several subcommands that could report various aspects of the ARPANET’s operating status. The SURVEY subcommand reported which hosts on the network were functioning and available (they all fit on a single list), while the SUMMARY.OF.SURVEY subcommand aggregated the results of past SURVEY runs to report an “up percentage” for each host as well as how long, on average, it took for each host to respond to messages. The output of the SUMMARY.OF.SURVEY subcommand was a table that looked like this:

    --HOST-- -#- -%-UP- -RESP-
    UCLA-NMC 001  097%  00.80
    SRI-ARC  002  068%  01.23
    UCSB-75  003  059%  00.63
    ...

The host number field, as you can see, has room for no more than three digits (ha!). Other NETWRK subcommands allowed users to look at a summary of survey results over a longer historical period or to examine the log of survey results for a single host.
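The kind of aggregation SUMMARY.OF.SURVEY performed can be sketched in a few lines of Python. This is not the NETWRK program's actual logic; the function name, the input format, and the sample probe data are all made up for illustration.

```python
def summarize(host: str, number: int, probes: list) -> str:
    """Build one summary row from past probes of a single host.

    `probes` holds a response time in seconds for each survey run,
    or None when the host did not answer (hypothetical input format).
    """
    if not probes:
        raise ValueError("need at least one probe")
    answered = [p for p in probes if p is not None]
    up_percent = round(100 * len(answered) / len(probes))
    average = sum(answered) / len(answered) if answered else 0.0
    return f"{host:<8} {number:03d} {up_percent:03d}% {average:05.2f}"


# Invented sample: two answers and one missed probe for a made-up host.
print(summarize("HOST-A", 1, [0.5, None, 1.1]))
```

The fixed-width formatting mirrors the three-digit host number column in the table above.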

The second of these scenarios featured a piece of software called the SRI-ARC Online System being developed at Stanford. This was a fancy piece of software with lots of functionality (it was the software system that Douglas Engelbart demoed in the “Mother of All Demos”), but one of the many things it could do was make use of what was essentially a file hosting service run on the host at UC Santa Barbara. From a terminal at the Washington Hilton, conference attendees could copy a file created at Stanford onto the host at UCSB simply by running a copy command and answering a few of the computer’s questions:

[ESC], [SP], and [CR] below stand for the escape, space, and carriage return keys respectively. The words in parentheses are prompts printed by the computer. The escape key is used to autocomplete the filename on the third line. The file being copied here is called <system>sample.txt;1, where the trailing one indicates the file’s version number and <system> indicates the directory. This was a convention for filenames used by the TENEX operating system.11

    @copy
    (TO/FROM UCSB) to
    (FILE) <system>sample [ESC] .TXT;1 [CR]
    (CREATE/REPLACE) create

These two scenarios might not look all that different from the first two, but they were remarkable. They were remarkable because they made it clear that, on the ARPANET, humans could talk to computers but computers could also talk to each other. The SURVEY results collected at MIT weren’t collected by a human regularly logging in to each machine to check if it was up; they were collected by a program that knew how to talk to the other machines on the network. Likewise, the file transfer from Stanford to UCSB didn’t involve any humans sitting at terminals at either Stanford or UCSB; the user at a terminal in Washington DC was able to get the two computers to talk to each other merely by invoking a piece of software. Even more, it didn’t matter which of the 40 terminals in the Ballroom you were sitting at, because you could view the MIT network monitoring statistics or store files at UCSB using any of the terminals with almost the same sequence of commands.

This is what was totally new about the ARPANET. The ICCC demonstration didn’t just involve a human communicating with a distant computer. It wasn’t just a demonstration of remote I/O. It was a demonstration of software remotely communicating with other software, something nobody had seen before.

To really appreciate why it was this aspect of the ARPANET project that was important and not the wires-across-the-country, physical connection thing that the host maps suggest (the wires were leased phone lines anyhow and were already there!), consider that, before the ARPANET project began in 1966, the ARPA offices in the Pentagon had a terminal room. Inside it were three terminals. Each connected to a different computer; one computer was at MIT, one was at UC Berkeley, and another was in Santa Monica.12 It was convenient for the ARPA staff that they could use these three computers even from Washington DC. But what was inconvenient for them was that they had to buy and maintain terminals from three different manufacturers, remember three different login procedures, and familiarize themselves with three different computing environments in order to use the computers. The terminals might have been right next to each other, but they were merely extensions of the host computing systems on the other end of the wire and operated as differently as the computers did. Communicating with a distant computer was possible before the ARPANET; the problem was that the heterogeneity of computing systems limited how sophisticated the communication could be.

Come Together, Right Now

So what I’m trying to drive home here is that there is an important distinction between statement A, “the ARPANET connected people in different locations via computers for the first time,” and statement B, “the ARPANET connected computer systems to each other for the first time.” That might seem like splitting hairs, but statement A elides some illuminating history in a way that statement B does not.

To begin with, the historian Joy Lisi Rankin has shown that people were socializing in cyberspace well before the ARPANET came along. In A People’s History of Computing in the United States, she describes several different digital communities that existed across the country on time-sharing networks prior to or apart from the ARPANET. These time-sharing networks were not, technically speaking, computer networks, since they consisted of a single mainframe computer running computations in a basement somewhere for many dumb terminals, like some portly chthonic creature with tentacles sprawling across the country. But they nevertheless enabled most of the social behavior now connoted by the word “network” in a post-Facebook world. For example, on the Kiewit Network, which was an extension of the Dartmouth Time-Sharing System to colleges and high schools across the Northeast, high school students collaboratively maintained a “gossip file” that allowed them to keep track of the exciting goings-on at other schools, “creating social connections from Connecticut to Maine.”13 Meanwhile, women at Mount Holyoke College corresponded with men at Dartmouth over the network, perhaps to arrange dates or keep in touch with boyfriends.14 This was all happening in the 1960s. Rankin argues that by ignoring these early time-sharing networks we impoverish our understanding of how American digital culture developed over the last 50 years, leaving room for a “Silicon Valley mythology” that credits everything to the individual genius of a select few founding fathers.

As for the ARPANET itself, if we recognize that the key challenge was connecting the computer systems and not just the physical computers, then that might change what we choose to emphasize when we tell the story of the innovations that made the ARPANET possible. The ARPANET was the first ever packet-switched network, and lots of impressive engineering went into making that happen. I think it’s a mistake, though, to say that the ARPANET was a breakthrough because it was the first packet-switched network and then leave it at that. The ARPANET was meant to make it easier for computer scientists across the country to collaborate; that project was as much about figuring out how different operating systems and programs written in different languages would interface with each other as it was about figuring out how to efficiently ferry data back and forth between Massachusetts and California. So the ARPANET was the first packet-switched network, but it was also an amazing standards success story, something I find especially interesting given how many times I’ve written about failed standards on this blog.

Inventing the protocols for the ARPANET was an afterthought even at the time, so naturally the job fell to a group made up largely of graduate students. This group, later known as the Network Working Group, met for the first time at UC Santa Barbara in August of 1968.15 There were 12 people present at that first meeting, most of whom were representatives from the four universities that were to be the first host sites on the ARPANET when the equipment was ready.16 Steve Crocker, then a graduate student at UCLA, attended; he told me over a Zoom call that it was all young guys at that first meeting, and that Elmer Shapiro, who chaired the meeting, was probably the oldest one there at around 38. ARPA had not put anyone in charge of figuring out how the computers would communicate once they were connected, but it was obvious that some coordination was necessary. As the group continued to meet, Crocker kept expecting some “legitimate adult” with more experience and authority to fly out from the East Coast to take over, but that never happened. The Network Working Group had ARPA’s tacit approval—all those meetings involved lots of long road trips, and ARPA money covered the travel expenses—so they were it.17

The Network Working Group faced a huge challenge. Nobody had ever sat down to connect computer systems together in a general-purpose way; that flew in the face of all of the assumptions that prevailed in computing in the late 1960s:

The typical mainframe of the period behaved as if it were the only computer in the universe. There was no obvious or easy way to engage two diverse machines in even the minimal communication needed to move bits back and forth. You could connect machines, but once connected, what would they say to each other? In those days a computer interacted with devices that were attached to it, like a monarch communicating with his subjects. Everything connected to the main computer performed a specific task, and each peripheral device was presumed to be ready at all times for a fetch-my-slippers type command…. Computers were strictly designed for this kind of interaction; they send instructions to subordinate card readers, terminals, and tape units, and they initiate all dialogues. But if another device in effect tapped the computer on the shoulder with a signal and said, “Hi, I’m a computer too,” the receiving machine would be stumped.18

As a result, the Network Working Group’s progress was initially slow.19 The group did not settle on an “official” specification for any protocol until June, 1970, nearly two years after the group’s first meeting.20

But by the time the ARPANET was to be shown off at the 1972 ICCC, all the key protocols were in place. A scenario like the chess scenario exercised many of them. When a user ran the command @e r, short for @echo remote, that instructed the TIP to make use of a facility in the new TELNET virtual teletype protocol to inform the remote host that it should echo the user’s input. When a user then ran the command @L 134, short for @login 134, that caused the TIP to invoke the Initial Connection Protocol with host 134, which in turn would cause the remote host to allocate all the necessary resources for the connection and drop the user into a TELNET session. (The file transfer scenario I described may well have made use of the File Transfer Protocol, though that protocol was only ready shortly before the conference.21) All of these protocols were known as “level three” protocols, and below them were the host-to-host protocol at level two (which defined the basic format for the messages the hosts should expect from each other), and the host-to-IMP protocol at level one (which defined how hosts communicated with the routing equipment they were linked to). Incredibly, the protocols all worked.

In my view, the Network Working Group was able to get everything together in time and just generally excel at its task because it adopted an open and informal approach to standardization, as exemplified by the famous Request for Comments (RFC) series of documents. These documents, originally circulated among the members of the Network Working Group by snail mail, were a way of keeping in touch between meetings and soliciting feedback on ideas. The “Request for Comments” framing was suggested by Steve Crocker, who authored the first RFC and supervised the RFC mailing list in the early years, in an attempt to emphasize the open-ended and collaborative nature of what the group was trying to do. That framing, and the availability of the documents themselves, made the protocol design process into a melting pot of contributions and riffs on other people’s contributions where the best ideas could emerge without anyone losing face. The RFC process was a smashing success and is still used to specify internet standards today, half a century later.

It’s this legacy of the Network Working Group that I think we should highlight when we talk about ARPANET’s impact. Though today one of the most magical things about the internet is that it can connect us with people on the other side of the planet, it’s only slightly facetious to say that that technology has been with us since the 19th century. Physical distance was conquered well before the ARPANET by the telegraph. The kind of distance conquered by the ARPANET was instead the logical distance between the operating systems, character codes, programming languages, and organizational policies employed at each host site. Implementing the first packet-switched network was of course a major feat of engineering that should also be mentioned, but the problem of agreeing on standards to connect computers that had never been designed to play nice with each other was the harder of the two big problems involved in building the ARPANET—and its solution was the most miraculous part of the ARPANET story.

In 1981, ARPA issued a “Completion Report” reviewing the first decade of the ARPANET’s history. In a section with the belabored title, “Technical Aspects of the Effort Which Were Successful and Aspects of the Effort Which Did Not Materialize as Originally Envisaged,” the authors wrote:

Possibly the most difficult task undertaken in the development of the ARPANET was the attempt—which proved successful—to make a number of independent host computer systems of varying manufacture, and varying operating systems within a single manufactured type, communicate with each other despite their diverse characteristics.22

There you have it from no less a source than the federal government of the United States.

If you enjoyed this post, more like it come out every four weeks! Follow @TwoBitHistory on Twitter or subscribe to the RSS feed to make sure you know when a new post is out.


Roy Fielding’s Misappropriated REST Dissertation

2020-06-28

https://twobithistory.org/2020/06/28/rest

RESTful APIs are everywhere. This is funny, because how many people really know what “RESTful” is supposed to mean?

I think most of us can empathize with [this Hacker News poster](https://news.ycombinator.com/item?id=7201871):

I’ve read several articles about REST, even a bit of the original paper. But I still have quite a vague idea about what it is. I’m beginning to think that nobody knows, that it’s simply a very poorly defined concept.

I had planned to write a blog post exploring how REST came to be such a dominant paradigm for communication across the internet. I started my research by reading [Roy Fielding’s 2000 dissertation](https://www.ics.uci.edu/~fielding/pubs/dissertation/fielding_dissertation_2up.pdf), which introduced REST to the world. After reading Fielding’s dissertation, I realized that the much more interesting story here is how Fielding’s ideas came to be so widely misunderstood.

Many more people know that Fielding’s dissertation is where REST came from than have read the dissertation (fair enough), so misconceptions about what the dissertation actually contains are pervasive.

The biggest of these misconceptions is that the dissertation directly addresses the problem of building APIs. I had always assumed, as I imagine many people do, that REST was intended from the get-go as an architectural model for web APIs built on top of HTTP. I thought perhaps that there had been some chaotic experimental period where people were building APIs on top of HTTP all wrong, and then Fielding came along and presented REST as the sane way to do things. But the timeline doesn’t make sense here: APIs for web services, in the sense that we know them today, weren’t a thing until a few years after Fielding published his dissertation.

Fielding’s dissertation (titled “Architectural Styles and the Design of Network-based Software Architectures”) is not about how to build APIs on top of HTTP but rather about HTTP itself. Fielding contributed to the HTTP/1.0 specification and co-authored the HTTP/1.1 specification, which was published in 1999. He was interested in the architectural lessons that could be drawn from the design of the HTTP protocol; his dissertation presents REST as a distillation of the architectural principles that guided the standardization process for HTTP/1.1. Fielding used these principles to make decisions about which proposals to incorporate into HTTP/1.1. For example, he rejected a proposal to batch requests using new MGET and MHEAD methods because he felt the proposal violated the constraints prescribed by REST, especially the constraint that messages in a REST system should be easy to proxy and cache.1 So HTTP/1.1 was instead designed around persistent connections over which multiple HTTP requests can be sent. (Fielding also felt that cookies are not RESTful because they add state to what should be a stateless system, but their usage was already entrenched.2) REST, for Fielding, was not a guide to building HTTP-based systems but a guide to extending HTTP.

This isn’t to say that Fielding doesn’t think REST could be used to build other systems. It’s just that he assumes these other systems will also be “distributed hypermedia systems.” This is another misconception people have about REST: that it is a general architecture you can use for any kind of networked application. But you could sum up the part of the dissertation where Fielding introduces REST as, essentially, “Listen, we just designed HTTP, so if you also find yourself designing a distributed hypermedia system you should use this cool architecture we worked out called REST to make things easier.” It’s not obvious why Fielding thinks anyone would ever attempt to build such a thing given that the web already exists; perhaps in 2000 it seemed like there was room for more than one distributed hypermedia system in the world. Anyway, Fielding makes clear that REST is intended as a solution for the scalability and consistency problems that arise when trying to connect hypermedia across the internet, not as an architectural model for distributed applications in general.

We remember Fielding’s dissertation now as the dissertation that introduced REST, but really the dissertation is about how much one-size-fits-all software architectures suck, and how you can better pick a software architecture appropriate for your needs. Only a single chapter of the dissertation is devoted to REST itself; much of the word count is spent on a taxonomy of alternative architectural styles3 that one could use for networked applications. Among these is the Pipe-and-Filter (PF) style, inspired by Unix pipes, along with various refinements of the Client-Server style (CS), such as Layered-Client-Server (LCS), Client-Cache-Stateless-Server (C$SS), and Layered-Client-Cache-Stateless-Server (LC$SS). The acronyms get unwieldy but Fielding’s point is that you can mix and match constraints imposed by existing styles to derive new styles. REST gets derived this way and could instead have been called—but for obvious reasons was not—Uniform-Layered-Code-on-Demand-Client-Cache-Stateless-Server (ULCODC$SS). Fielding establishes this taxonomy to emphasize that different constraints are appropriate for different applications and that this last group of constraints were the ones he felt worked best for HTTP.
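The mix-and-match idea can be sketched in a few lines of Python. This is only an illustration of the derivation described above, not code from the dissertation: each style is modeled as a set of constraint names, the variable names are invented here, and `CSS` (Client-Stateless-Server) is an intermediate style that this post does not mention.

```python
# Each architectural style is modeled as a frozenset of constraint names.
# The names and this representation are assumptions made for illustration.
CS = frozenset({"client-server"})

LCS = CS | {"layered"}            # Layered-Client-Server
CSS = CS | {"stateless"}          # Client-Stateless-Server (intermediate step)
C_CSS = CSS | {"cache"}           # Client-Cache-Stateless-Server, "C$SS"
LC_CSS = C_CSS | {"layered"}      # Layered-Client-Cache-Stateless-Server, "LC$SS"

# REST adds two more constraints on top of LC$SS.
REST = LC_CSS | {"uniform interface", "code-on-demand"}

if __name__ == "__main__":
    for constraint in sorted(REST):
        print(constraint)
```

Because every style is just a set, asking whether one style refines another is a subset check: `LCS <= REST` is true, for example.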

This is the deep, deep irony of REST’s ubiquity today. REST gets blindly used for all sorts of networked applications now, but Fielding originally offered REST as an illustration of how to derive a software architecture tailored to an individual application’s particular needs.

I struggle to understand how this happened, because Fielding is so explicit about the pitfalls of not letting form follow function. He warns, almost at the very beginning of the dissertation, that “design-by-buzzword is a common occurrence” brought on by a failure to properly appreciate software architecture.4 He picks up this theme again several pages later:

Some architectural styles are often portrayed as “silver bullet” solutions for all forms of software. However, a good designer should select a style that matches the needs of a particular problem being solved.5

REST itself is an especially poor “silver bullet” solution, because, as Fielding later points out, it incorporates trade-offs that may not be appropriate unless you are building a distributed hypermedia application:

REST is designed to be efficient for large-grain hypermedia data transfer, optimizing for the common case of the Web, but resulting in an interface that is not optimal for other forms of architectural interaction.6

Fielding came up with REST because the web posed a thorny problem of “anarchic scalability,” by which Fielding means the need to connect documents in a performant way across organizational and national boundaries. The constraints that REST imposes were carefully chosen to solve this anarchic scalability problem. Web service APIs that are public-facing have to deal with a similar problem, so one can see why REST is relevant there. Yet today it would not be at all surprising to find that an engineering team has built a backend using REST even though the backend only talks to clients that the engineering team has full control over. We have all become the architect in [this Monty Python sketch](https://www.youtube.com/watch?v=vNoPJqm3DAY), who designs an apartment building in the style of a slaughterhouse because slaughterhouses are the only thing he has experience building. (Fielding uses a line from this sketch as an epigraph for his dissertation: “Excuse me… did you say ‘knives’?”)

So, given that Fielding’s dissertation was all about avoiding silver bullet software architectures, how did REST become a de facto standard for web services of every kind?

My theory is that, in the mid-2000s, the people who were sick of SOAP and wanted to do something else needed their own four-letter acronym.

I’m only half-joking here. SOAP, or the Simple Object Access Protocol, is a verbose and complicated protocol that you cannot use without first understanding a bunch of interrelated XML specifications. Early web services offered APIs based on SOAP, but, as more and more APIs started being offered in the mid-2000s, software developers burned by SOAP’s complexity migrated away en masse.

Among this crowd, SOAP inspired contempt. Ruby-on-Rails dropped SOAP support in 2007, leading to this emblematic comment from Rails creator David Heinemeier Hansson: “We feel that SOAP is overly complicated. It’s been taken over by the enterprise people, and when that happens, usually nothing good comes of it.”7 The “enterprise people” wanted everything to be formally specified, but the get-shit-done crowd saw that as a waste of time.

If the get-shit-done crowd wasn’t going to use SOAP, they still needed some standard way of doing things. Since everyone was using HTTP, and since everyone would keep using HTTP at least as a transport layer because of all the proxying and caching support, the simplest possible thing to do was just rely on HTTP’s existing semantics. So that’s what they did. They could have called their approach Fuck It, Overload HTTP (FIOH), and that would have been an accurate name, as anyone who has ever tried to decide what HTTP status code to return for a business logic error can attest. But that would have seemed recklessly blasé next to all the formal specification work that went into SOAP.

Luckily, there was this dissertation out there, written by a co-author of the HTTP/1.1 specification, that had something vaguely to do with extending HTTP and could offer FIOH a veneer of academic respectability. So REST was appropriated to give cover for what was really just FIOH.

I’m not saying that this is exactly how things happened, or that there was an actual conspiracy among irreverent startup types to misappropriate REST, but this story helps me understand how REST became a model for web service APIs when Fielding’s dissertation isn’t about web service APIs at all. Adopting REST’s constraints makes some sense, especially for public-facing APIs that do cross organizational boundaries and thus benefit from REST’s “uniform interface.” That link must have been the kernel of why REST first got mentioned in connection with building APIs on the web. But imagining a separate approach called “FIOH,” that borrowed the “REST” name partly just for marketing reasons, helps me account for the many disparities between what today we know as RESTful APIs and the REST architectural style that Fielding originally described.

REST purists often complain, for example, that so-called REST APIs aren’t actually REST APIs because they do not use Hypermedia as The Engine of Application State (HATEOAS). Fielding himself [has made this criticism](https://roy.gbiv.com/untangled/2008/rest-apis-must-be-hypertext-driven). According to him, a real REST API is supposed to allow you to navigate all its endpoints from a base endpoint by following links. If you think that people are actually out there trying to build REST APIs, then this is a glaring omission: HATEOAS really is fundamental to Fielding’s original conception of REST, especially considering that the “state transfer” in “Representational State Transfer” refers to navigating a state machine using hyperlinks between resources (and not, as many people seem to believe, to transferring resource state over the wire).8 But if you imagine that everyone is just building FIOH APIs and advertising them, with a nudge and a wink, as REST APIs, or slightly more honestly as “RESTful” APIs, then of course HATEOAS is unimportant.
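To make the HATEOAS idea concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the paths, the `links` field, and the in-memory `FAKE_API` dictionary standing in for a real HTTP server are assumptions for illustration, not anything Fielding specifies. The only point is that the client starts from one base endpoint and discovers the rest by following links.

```python
# Hypothetical in-memory "API": each path maps to a representation that
# carries its own links. A real client would fetch these over HTTP.
FAKE_API = {
    "/": {"links": {"orders": "/orders", "customers": "/customers"}},
    "/orders": {"links": {"first": "/orders/1"}},
    "/orders/1": {"item": "tennis ball", "links": {"customer": "/customers/7"}},
    "/customers": {"links": {"first": "/customers/7"}},
    "/customers/7": {"name": "Ada", "links": {}},
}


def fetch(path):
    """Stand-in for an HTTP GET; returns the representation at `path`."""
    return FAKE_API[path]


def discover(base="/"):
    """Return every path reachable from `base` by following links only."""
    seen = []
    queue = [base]
    while queue:
        path = queue.pop(0)
        if path in seen:
            continue  # already visited via another link
        seen.append(path)
        queue.extend(fetch(path)["links"].values())
    return seen


if __name__ == "__main__":
    for path in discover():
        print(path)
```

The client hard-codes only the base path. A typical "RESTful" client, by contrast, would have every one of those paths written into its source.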

Similarly, you might be surprised to know that there is nothing in Fielding’s dissertation about which HTTP verb should map to which CRUD action, even though software developers like to argue endlessly about whether using PUT or PATCH to update a resource is more RESTful. Having a standard mapping of HTTP verbs to CRUD actions is a useful thing, but this standard mapping is part of FIOH and not part of REST.
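For reference, the conventional mapping that most “RESTful” frameworks assume looks roughly like the sketch below. This is the folk convention, not something taken from Fielding’s dissertation, and the `CRUD_TO_HTTP` table and `methods_for` helper are names invented here.

```python
# Conventional (not dissertation-derived) mapping of CRUD actions to HTTP verbs.
CRUD_TO_HTTP = {
    "create": ["POST"],
    "read": ["GET"],
    "update": ["PUT", "PATCH"],  # full replacement vs. partial modification
    "delete": ["DELETE"],
}


def methods_for(action):
    """Return the HTTP verbs conventionally used for a CRUD action."""
    return CRUD_TO_HTTP[action.lower()]


if __name__ == "__main__":
    for action in CRUD_TO_HTTP:
        print(action, "->", ", ".join(methods_for(action)))
```

The two entries for "update" are where the endless PUT-versus-PATCH argument comes from.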

This is why, rather than saying that nobody understands REST, we should just think of the term “REST” as having been misappropriated. The modern notion of a REST API has historical links to Fielding’s REST architecture, but really the two things are separate. The historical link is good to keep in mind as a guide for when to build a RESTful API. Does your API cross organizational and national boundaries the same way that HTTP needs to? Then building a RESTful API with a predictable, uniform interface might be the right approach. If not, it’s good to remember that Fielding favored having form follow function. Maybe something like GraphQL or even just JSON-RPC would be a better fit for what you are trying to accomplish.


How to Use a Differential Analyzer (to Murder People)

2020-04-06

https://twobithistory.org/2020/04/06/differential-analyzer

A differential analyzer is a mechanical, analog computer that can solve differential equations. Differential analyzers aren’t used anymore because even a cheap laptop can solve the same equations much faster, and can do it in the background while you stream the new season of Westworld on HBO. Before the invention of digital computers though, differential analyzers allowed mathematicians to make calculations that would not have been practical otherwise.

It is hard to see today how a computer made out of anything other than digital circuitry printed in silicon could work. A mechanical computer sounds like something out of a steampunk novel. But differential analyzers did work and even proved to be an essential tool in many lines of research. Most famously, differential analyzers were used by the US Army to calculate range tables for their artillery pieces. Even the largest gun is not going to be effective unless you have a range table to help you aim it, so differential analyzers arguably played an important role in helping the Allies win the Second World War.

To understand how differential analyzers could do all this, you will need to know what differential equations are. Forgotten what those are? That’s okay, because I had too.

Differential Equations

Differential equations are something you might first encounter in the final few weeks of a college-level Calculus I course. By that point in the semester, your underpaid adjunct professor will have taught you about limits, derivatives, and integrals; if you take those concepts and add an equals sign, you get a differential equation.

Differential equations describe rates of change in terms of some other variable (or perhaps multiple other variables). Whereas a familiar algebraic expression like \(y = 4x + 3\) specifies the relationship between some variable quantity \(y\) and some other variable quantity \(x\), a differential equation, which might look like \(\frac{dy}{dx} = x\), or even \(\frac{dy}{dx} = 2\), specifies the relationship between a rate of change and some other variable quantity. Basically, a differential equation is just a description of a rate of change in exact mathematical terms. The first of those last two differential equations is saying, “The variable \(y\) changes with respect to \(x\) at a rate defined exactly by \(x\),” and the second is saying, “No matter what \(x\) is, the variable \(y\) changes with respect to \(x\) at a rate of exactly 2.”

Differential equations are useful because in the real world it is often easier to describe how complex systems change from one instant to the next than it is to come up with an equation describing the system at all possible instants. Differential equations are widely used in physics and engineering for that reason. One famous differential equation is the heat equation, which describes how heat diffuses through an object over time. It would be hard to come up with a function that fully describes the distribution of heat throughout an object given only a time \(t\), but reasoning about how heat diffuses from one time to the next is less likely to turn your brain into soup—the hot bits near lots of cold bits will probably get colder, the cold bits near lots of hot bits will probably get hotter, etc. So the heat equation, though it is much more complicated than the examples in the last paragraph, is likewise just a description of rates of change. It describes how the temperature of any one point on the object will change over time given how its temperature differs from the points around it.
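For reference, the heat equation in its most common textbook form is shown below. The symbols are not from this post: \(u\) is assumed to be the temperature at a given point and time, and \(\alpha\) is a constant describing how readily heat spreads through the material (its thermal diffusivity).

\[\frac{\partial u}{\partial t} = \alpha \nabla^2 u\]

The left side is the rate of change of temperature over time. The right side measures how much a point’s temperature differs from the average of its immediate surroundings, which is the hot-bits-get-colder intuition written down exactly.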

Let’s consider another example that I think will make all of this more concrete. If I am standing in a vacuum and throw a tennis ball straight up, will it come back down before I asphyxiate? This kind of question, posed less dramatically, is the kind of thing I was asked in high school physics class, and all I needed to solve it back then were some basic Newtonian equations of motion. But let’s pretend for a minute that I have forgotten those equations and all I can remember is that objects accelerate toward earth at a constant rate of \(g\), or about \(10 \;m/s^2\). How can differential equations help me solve this problem?

Well, we can express the one thing I remember about high school physics as a differential equation. The tennis ball, once it leaves my hand, will accelerate toward the earth at a rate of \(g\). This is the same as saying that the velocity of the ball will change (in the negative direction) over time at a rate of \(g\). We could even go one step further and say that the rate of change in the height of my ball above the ground (this is just its velocity) will change over time at a rate of negative \(g\). We can write this down as the following, where \(h\) represents height and \(t\) represents time:

\[\frac{d^2h}{dt^2} = -g\]

This looks slightly different from the differential equations we have seen so far because this is what is known as a second-order differential equation. We are talking about the rate of change of a rate of change, which, as you might remember from your own calculus education, involves second derivatives. That’s why parts of the expression on the left look like they are being squared. But this equation is still just expressing the fact that the ball accelerates downward at a constant acceleration of \(g\).

From here, one option I have is to use the tools of calculus to solve the differential equation. With differential equations, this does not mean finding a single value or set of values that satisfy the relationship but instead finding a function or set of functions that do. Another way to think about this is that the differential equation is telling us that there is some function out there whose second derivative is the constant \(-g\); we want to find that function because it will give us the height of the ball at any given time. This differential equation happens to be an easy one to solve. By doing so, we can re-derive the basic equations of motion that I had forgotten and easily calculate how long it will take the ball to come back down.
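That derivation is short enough to sketch. The constants \(v_0\) and \(h_0\) are assumptions introduced here (they are not named in the text above): they stand for the ball’s initial upward velocity and initial height. Integrating once with respect to \(t\) gives the velocity, and integrating again gives the height:

\[\frac{dh}{dt} = v_0 - gt\]

\[h(t) = h_0 + v_0 t - \frac{1}{2} g t^2\]

Taking \(h_0 = 0\) and solving \(h(t) = 0\) for \(t > 0\) gives the flight time:

\[t = \frac{2 v_0}{g}\]

With a purely hypothetical throw of \(v_0 = 20 \;m/s\) and \(g \approx 10 \;m/s^2\), the ball comes back after about 4 seconds.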

But most of the time differential equations are hard to solve. Sometimes they are even impossible to solve. So another option I have, given that I paid more attention in my computer science classes than my calculus classes in college, is to take my differential equation and use it as the basis for a simulation. If I know the starting velocity and the acceleration of my tennis ball, then I can easily write a little for-loop, perhaps in Python, that iterates through my problem second by second and tells me what the velocity will be at any given second \(t\) after the initial time. Once I’ve done that, I could tweak my for-loop so that it also uses the calculated velocity to update the height of the ball on each iteration. Now I can run my Python simulation and figure out when the ball will come back down. My simulation won’t be perfectly accurate, but I can decrease the size of the time step if I need more accuracy. All I am trying to accomplish anyway is to figure out if the ball will come back down while I am still alive.
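Here is roughly what that for-loop might look like. It is a minimal sketch rather than a careful numerical method: the function name `flight_time`, the 20 m/s throw, and the default step size are all assumptions chosen for illustration.

```python
def flight_time(v0, g=10.0, dt=0.001, max_steps=10_000_000):
    """Approximate how long a ball thrown straight up takes to come back.

    v0 is the initial upward velocity in m/s (assumed positive), g is the
    downward acceleration in m/s^2, and dt is the time step in seconds.
    """
    velocity = v0
    height = 0.0
    for step in range(1, max_steps + 1):
        velocity -= g * dt        # acceleration changes the velocity
        height += velocity * dt   # velocity changes the height
        if height <= 0.0:
            return step * dt      # back at (or below) the starting height
    raise RuntimeError("ball did not come back within max_steps")


if __name__ == "__main__":
    # Hypothetical throw: 20 m/s straight up.
    print(flight_time(20.0))
    # A smaller time step trades speed for accuracy.
    print(flight_time(20.0, dt=0.0001))
```

For this throw the exact answer from the equations of motion is \(2 v_0 / g\), or 4 seconds, and the simulated result should land very close to that. Shrinking `dt` moves it closer still.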

This is the numerical approach to solving a differential equation. It is how differential equations are solved in practice in most fields where they arise. Computers are indispensable here, because the accuracy of the simulation depends on us being able to take millions of small little steps through our problem. Doing this by hand would obviously be error-prone and take a long time.

So what if I were not just standing in a vacuum with a tennis ball but were standing in a vacuum with a tennis ball in, say, 1936? I still want to automate my computation, but Claude Shannon won’t even complete his master’s thesis for another year yet (the one in which he casually implements Boolean algebra using electronic circuits). Without digital computers, I’m afraid, we have to go analog.

The Differential Analyzer

The first differential analyzer was built between 1928 and 1931 at MIT by Vannevar Bush and Harold Hazen. Both men were engineers. The machine was created to tackle practical problems in applied mathematics and physics. It was supposed to address what Bush described, in [a 1931 paper](http://worrydream.com/refs/Bush%20-%20The%20Differential%20Analyzer.pdf) about the machine, as the contemporary problem of mathematicians who are “continually being hampered by the complexity rather than the profundity of the equations they employ.”

A differential analyzer is a complicated arrangement of rods, gears, and spinning discs that can solve differential equations of up to the sixth order. In this way it is like a digital computer, which is also a complicated arrangement of simple parts that somehow adds up to a machine that can do amazing things. But whereas the circuitry of a digital computer implements Boolean logic that is then used to simulate arbitrary problems, the rods, gears, and spinning discs directly simulate the differential equation problem. This is what makes a differential analyzer an analog computer: it is a direct mechanical analogy for the real problem.

How on earth do gears and spinning discs do calculus? This is actually the easiest part of the machine to explain. The most important components in a differential analyzer are the six mechanical integrators, one for each order in a sixth-order differential equation. A mechanical integrator is a relatively simple device that can integrate a single input function; mechanical integrators go back to the 19th century. We will want to understand how they work, but, as an aside here, Bush’s big accomplishment was not inventing the mechanical integrator but rather figuring out a practical way to chain integrators together to solve higher-order differential equations.

A mechanical integrator consists of one large spinning disc and one much smaller spinning wheel. The disc is laid flat parallel to the ground like the turntable of a record player. It is driven by a motor and rotates at a constant speed. The small wheel is suspended above the disc so that it rests on the surface of the disc ever so slightly—with enough pressure that the disc drives the wheel but not enough that the wheel cannot freely slide sideways over the surface of the disc. So as the disc turns, the wheel turns too.

The speed at which the wheel turns will depend on how far from the center of the disc the wheel is positioned. The inner parts of the disc, of course, are rotating more slowly than the outer parts. The wheel stays fixed where it is, but the disc is mounted on a carriage that can be moved back and forth in one direction, which repositions the wheel relative to the center of the disc. Now this is the key to how the integrator works: The position of the disc carriage is driven by the input function to the integrator. The output from the integrator is determined by the rotation of the small wheel. So your input function drives the rate of change of your output function and you have just transformed the derivative of some function into the function itself—which is what we call integration!
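To make the wheel-and-disc principle concrete, here is a rough numerical model of it. It is a sketch under simplifying assumptions, not a description of Bush's hardware: the name `wheel_and_disc` and its parameters are made up for illustration, the wheel is assumed never to slip, and the disc's rotation is treated as the independent variable \(x\). Each small turn of the disc rolls the wheel by an amount proportional to the wheel's distance from the disc's center, and that distance is set by the input function.

```python
import math


def wheel_and_disc(f, x_end, steps=100_000, wheel_radius=1.0):
    """Accumulate the rotation of the small wheel as the disc turns.

    The disc's rotation stands in for x, running from 0 to x_end. At each
    moment the wheel sits f(x) away from the disc's center, so a small
    turn dx of the disc rolls the wheel by f(x) * dx / wheel_radius.
    A negative f(x) puts the wheel on the other side of the center,
    which turns it backwards.
    """
    dx = x_end / steps
    wheel_rotation = 0.0
    for i in range(steps):
        x = i * dx
        distance_from_center = f(x)  # carriage position set by the input
        wheel_rotation += distance_from_center * dx / wheel_radius
    return wheel_rotation


if __name__ == "__main__":
    # Feeding in cos(x) should leave the wheel turned by roughly sin(x).
    print(wheel_and_disc(math.cos, math.pi / 2), math.sin(math.pi / 2))
```

The running total in `wheel_rotation` is just a sum of many small products, which is all an integral is. The machine does the same accumulation continuously and mechanically instead of in discrete steps.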

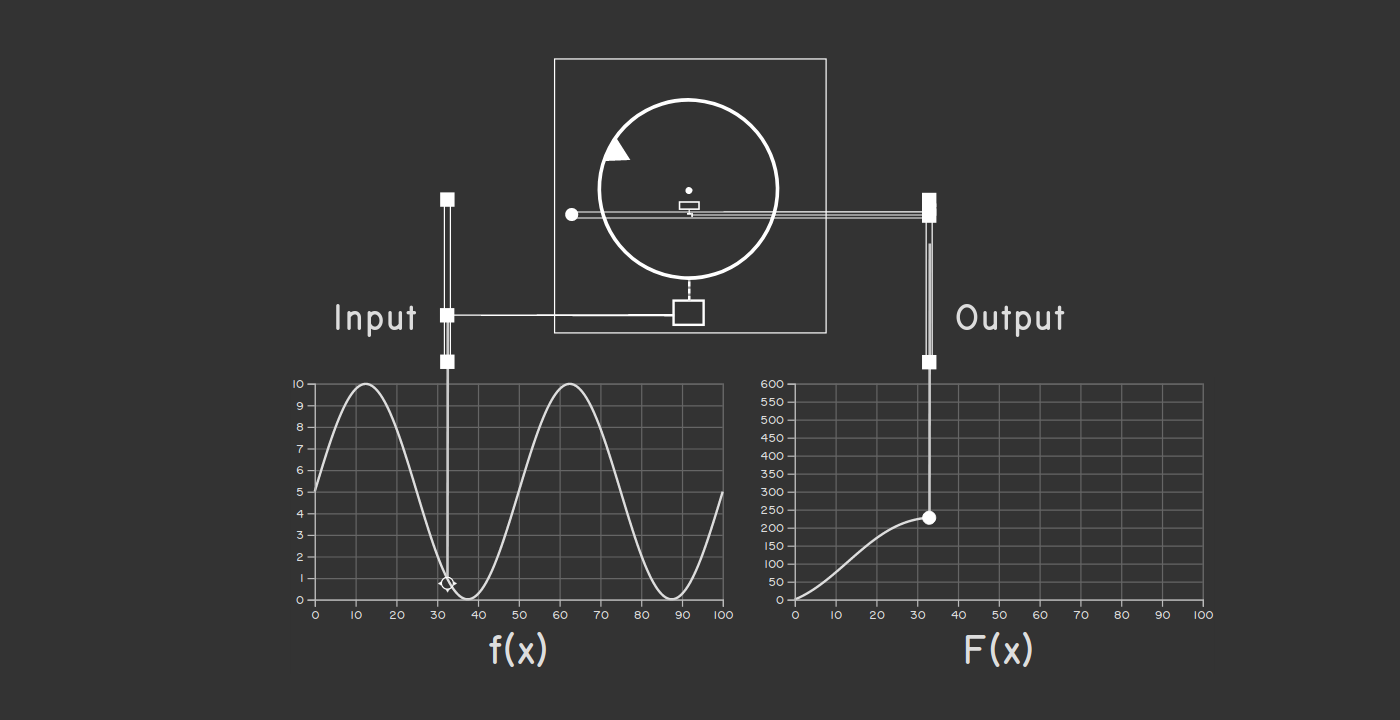

If that explanation does nothing for you, seeing a mechanical integrator in action really helps. The principle is surprisingly simple and there is no way to watch the device operate without grasping how it works. So I have created [a visualization of a running mechanical integrator](https://sinclairtarget.com/differential-analyzer/) that I encourage you to take a look at. The visualization shows the integration of some function \(f(x)\) into its antiderivative \(F(x)\) while various things spin and move. It’s pretty exciting.

A nice screenshot of my visualization, but you should check out the real thing!


So we have a component that can do integration for us, but that alone is not enough to solve a differential equation. To explain the full process to you, I’m going to use an example that Bush offers himself in his 1931 paper, which also happens to be essentially the same example we contemplated in our earlier discussion of differential equations. (This was a happy accident!) Bush introduces the following differential equation to represent the motion of a falling body:

\[\frac{d^2x}{dt^2} = -k\,\frac{dx}{dt} - g\]

This is the same equation we used to model the motion of our tennis ball, only Bush has used \(x\) in place of \(h\) and has added another term that accounts for how air resistance will decelerate the ball. This new term describes the effect of air resistance on the ball in the simplest possible way: The air will slow the ball’s velocity at a rate that is proportional to its velocity (the \(k\) here is some proportionality constant whose value we don’t really care about). So as the ball moves faster, the force of air resistance will be stronger, further decelerating the ball.

To configure a differential analyzer to solve this differential equation, we have to start with what Bush calls the “input table.” The input table is just a piece of graphing paper mounted on a carriage. If we were trying to solve a more complicated equation, the operator of the machine would first plot our input function on the graphing paper and then, once the machine starts running, trace out the function using a pointer connected to the rest of the machine. In this case, though, our input is just the constant \(g\), so we only have to move the pointer to the right value and then leave it there.

What about the other variables \(x\) and \(t\)? The \(x\) variable is our output as it represents the height of the ball. It will be plotted on graphing paper placed on the output table, which is similar to the input table only the pointer is a pen and is driven by the machine. The \(t\) variable should do nothing more than advance at a steady rate. (In our Python simulation of the tennis ball problem as posed earlier, we just incremented \(t\) in a loop.) So the \(t\) variable comes from the differential analyzer’s motor, which kicks off the whole process by rotating the rod connected to it at a constant speed.

Bush has a helpful diagram documenting all of this that I will show you in a second, but first we need to make one more tweak to our differential equation that will make the diagram easier to understand. We can integrate both sides of our equation once, yielding the following:

\[\frac{dx}{dt} = - \int \left(k\,\frac{dx}{dt} + g\right)\,dt\]

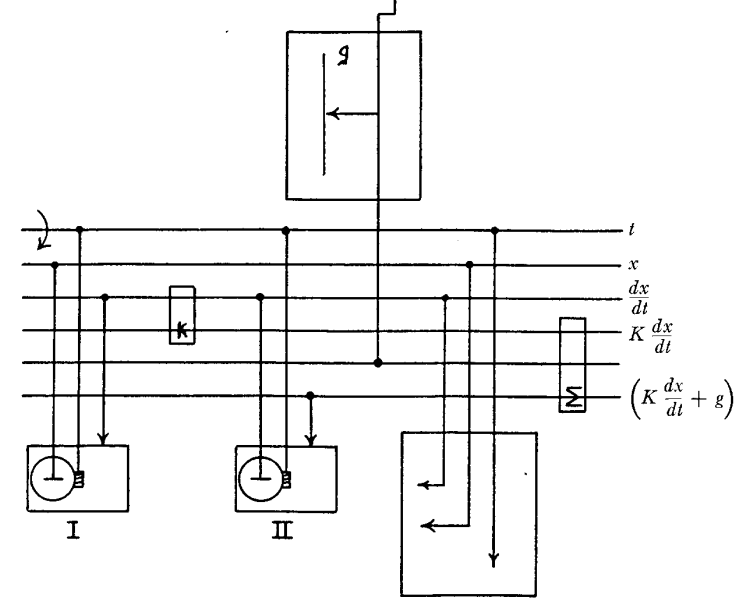

The terms in this equation map better to values represented by the rotation of various parts of the machine while it runs. Okay, here’s that diagram:

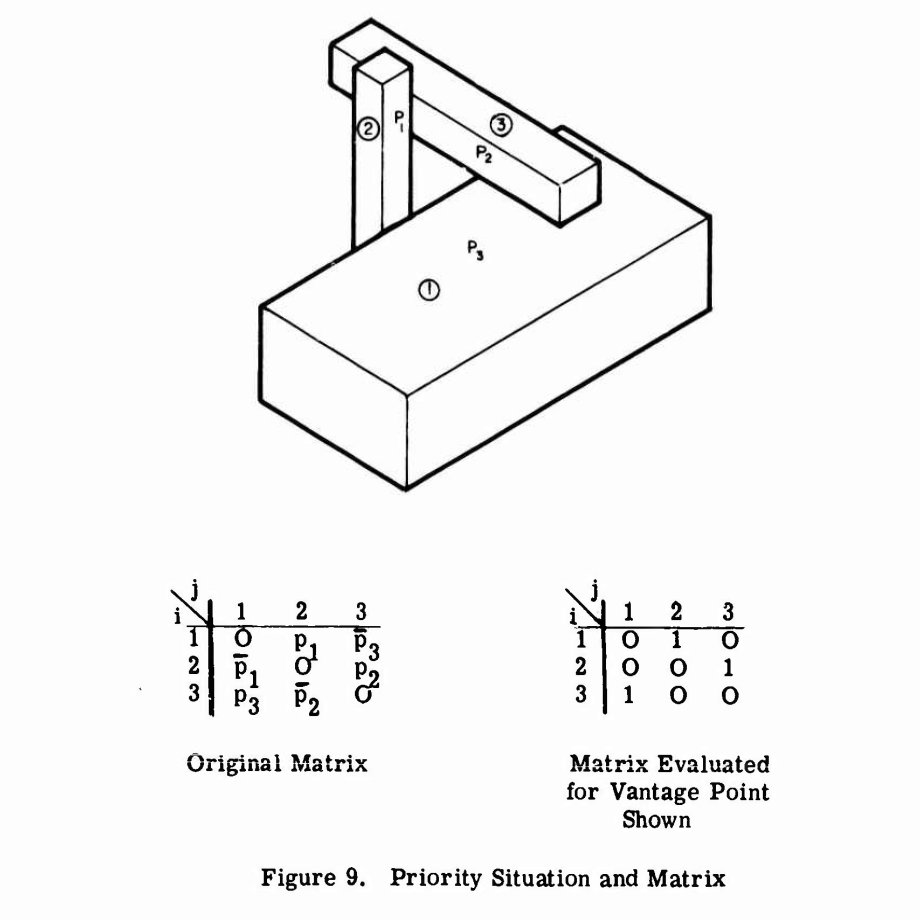

The differential analyzer configured to solve the problem of a falling body in one dimension.


The input table is at the top of the diagram. The output table is at the bottom-right. The output table here is set up to graph both \(x\) and \(\frac{dx}{dt}\), i.e. height and velocity. The integrators appear at the bottom-left; since this is a second-order differential equation, we need two. The motor drives the very top rod labeled \(t\). (Interestingly, Bush referred to these horizontal rods as “buses.”)

That leaves two components unexplained. The box with the little \(k\) in it is a multiplier representing our proportionality constant \(k\). It takes the rotation of the rod labeled \(\frac{dx}{dt}\) and scales it up or down using a gear ratio. The box with the \(\sum\) symbol is an adder. It uses a clever arrangement of gears to add the rotations of two rods together to drive a third rod. We need it since our equation involves the sum of two terms. These extra components available in the differential analyzer ensure that the machine can flexibly simulate equations with all kinds of terms and coefficients.

I find it helpful to reason in ultra-slow motion about the cascade of cause and effect that plays out as soon as the motor starts running. The motor immediately begins to rotate the rod labeled \(t\) at a constant speed. Thus, we have our notion of time. This rod does three things, illustrated by the three vertical rods connected to it: it drives the rotation of the discs in both integrators and also advances the carriage of the output table so that the output pen begins to draw.

Now if the integrators were set up so that their wheels are centered, then the rotation of rod \(t\) would cause no other rods to rotate. The integrator discs would spin but the wheels, centered as they are, would not be driven. The output chart would just show a flat line. This happens because we have not accounted for the initial conditions of the problem. In our earlier Python simulation, we needed to know the initial velocity of the ball, which we would have represented there as a constant variable or as a parameter of our Python function. Here, we account for the initial velocity and acceleration by displacing the integrator discs by the appropriate amount before the machine begins to run.

Once we’ve done that, the rotation of rod \(t\) propagates through the whole system. Physically, a lot of things start rotating at the same time, but we can think of the rotation going first to integrator II, which combines it with the acceleration expression calculated based on \(g\) and then integrates it to get the result \(\frac{dx}{dt}\). This represents the velocity of the ball. The velocity is in turn used as input to integrator I, whose disc is displaced so that the output wheel rotates at the rate \(\frac{dx}{dt}\). The output from integrator I is our final output \(x\), which gets routed directly to the output table.

One confusing thing I’ve glossed over is that there is a cycle in the machine: Integrator II takes as an input the rotation of the rod labeled \((k\,\frac{dx}{dt} + g)\), but that rod’s rotation is determined in part by the output from integrator II itself. This might make you feel queasy, but there is no physical issue here—everything is rotating at once. If anything, we should not be surprised to see cycles like this, since differential equations often describe rates of change in a function as a function of the function itself. (In this example, the acceleration, which is the rate of change of velocity, depends on the velocity.)
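The whole arrangement, feedback loop included, can be imitated in a few lines of Python. What follows is a sketch of the equation the machine is wired up to solve, not a simulation of the hardware itself. The function name `falling_body`, its parameters, and the example values are all assumptions made for illustration. Each line in the loop body stands in for one component in the diagram, and the machine's continuous rotation is replaced by small discrete steps of size `dt`.

```python
def falling_body(x0, v0, k, g=9.8, dt=0.001, t_end=5.0):
    """Step through the falling-body equation with two chained integrations.

    x0 and v0 are the initial height and velocity, playing the role of the
    initial settings made before the motor starts. Returns a list of
    (t, x, velocity) rows, much like the two traces on the output table.
    """
    x = x0
    velocity = v0
    rows = [(0.0, x, velocity)]
    steps = round(t_end / dt)
    for step in range(1, steps + 1):
        scaled = k * velocity      # the multiplier box: k * dx/dt
        summed = scaled + g        # the adder box: k * dx/dt + g
        velocity -= summed * dt    # integrator II: dx/dt = -integral(...)
        x += velocity * dt         # integrator I: x = integral of dx/dt
        rows.append((step * dt, x, velocity))
    return rows


if __name__ == "__main__":
    # Drop a ball from 100 m with an assumed drag constant of 0.5.
    for t, x, velocity in falling_body(100.0, 0.0, k=0.5)[::1000]:
        print(f"t={t:.1f}  x={x:.2f}  dx/dt={velocity:.2f}")
```

The cycle shows up as `velocity` appearing on both sides of the loop: it feeds the multiplier at the top of each iteration and is then updated by integrator II. The code has to pick an order for these updates, whereas in the machine everything rotates at once.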

With everything correctly configured, the output we get is a nice graph, charting both the position and velocity of our ball over time. This graph is on paper. To our modern digital sensibilities, that might seem absurd. What can you do with a paper graph? While it’s true that the differential analyzer is not so magical that it can write out a neat mathematical expression for the solution to our problem, it’s worth remembering that neat solutions to many differential equations are not possible anyway. The paper graph that the machine does write out contains exactly the same information that could be output by our earlier Python simulation of a falling ball: where the ball is at any given time. It can be used to answer any practical question you might have about the problem.

The differential analyzer is a preposterously cool machine. It is complicated, but it fundamentally involves nothing more than rotating rods and gears. You don’t have to be an electrical engineer or know how to fabricate a microchip to understand all the physical processes involved. And yet the machine does calculus! It solves differential equations that you never could on your own. The differential analyzer demonstrates that the key material required for the construction of a useful computing machine is not silicon but human ingenuity.

Murdering People

Human ingenuity can serve purposes both good and bad. As I have mentioned, the highest-profile use of differential analyzers historically was to calculate artillery range tables for the US Army. To the extent that the Second World War was the “Good Fight,” this was probably for the best. But there is also no getting past the fact that differential analyzers helped to make very large guns better at killing lots of people. And kill lots of people they did. If Wikipedia is to be believed, more soldiers were killed by artillery than by small arms fire during the Second World War.

I will get back to the moralizing in a minute, but just a quick detour here to explain why calculating range tables was hard and how differential analyzers helped, because it’s nice to see how differential analyzers were applied to a real problem. A range table tells the artilleryman operating a gun how high to elevate the barrel to reach a certain range. One way to produce a range table might be just to fire that particular kind of gun at different angles of elevation many times and record the results. This was done at proving grounds like the Aberdeen Proving Ground in Maryland. But producing range tables solely through empirical observation like this is expensive and time-consuming. There is also no way to account for other factors like the weather or for different weights of shell without combinatorially increasing the necessary number of firings to something unmanageable. So using a mathematical theory that can fill in a complete range table based on a smaller number of observed firings is a better approach.
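To give a feel for what "filling in" a range table from a mathematical model means, here is a toy sketch. It is emphatically not a real ballistic theory: it reuses the simple proportional-drag model from the falling-body example, extended to two dimensions, and the function names, the drag constant, and the muzzle speed are all assumptions chosen for illustration.

```python
import math


def shot_range(elevation_deg, muzzle_speed, k=0.05, g=9.8, dt=0.001):
    """Return the horizontal distance a shell travels before landing.

    Toy model only: drag is simply proportional to velocity, and wind,
    air density, shell shape, and the earth's rotation are all ignored.
    """
    angle = math.radians(elevation_deg)
    vx = muzzle_speed * math.cos(angle)
    vy = muzzle_speed * math.sin(angle)
    x = y = 0.0
    while True:
        vx -= k * vx * dt          # drag slows horizontal motion
        vy -= (k * vy + g) * dt    # drag and gravity act on vertical motion
        x += vx * dt
        y += vy * dt
        if y <= 0.0:               # the shell is back at ground level
            return x


def range_table(muzzle_speed, elevations=range(5, 50, 5)):
    """Tabulate (elevation in degrees, range in meters) for one gun."""
    return [(e, shot_range(e, muzzle_speed)) for e in elevations]


if __name__ == "__main__":
    for elevation, distance in range_table(100.0):
        print(f"{elevation:2d} degrees -> {distance:7.1f} m")
```

Every row here costs one simulated firing instead of one real one, which is the appeal of the approach. A real table would also need the model's constants calibrated against observed firings.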

I don’t want to get too deep into how these mathematical theories work, because the math is complicated and I don’t really understand it. But as you might imagine, the physics that governs the motion of an artillery shell in flight is not that different from the physics that governs the motion of a tennis ball thrown upward. The need for accuracy means that the differential equations employed have to depart from the idealized forms we’ve been using and quickly get gnarly. Even the earliest attempts to formulate a rigorous ballistic theory involve equations that account for, among other factors, the weight, diameter, and shape of the projectile, the prevailing wind, the altitude, the atmospheric density, and the rotation of the earth1.